Understanding Differential Privacy

— Technical, Security — 7 min read

In this article, we will get an overview of resolving the privacy issue using differential privacy. You will understand the basics of how privacy is preserved in databases used for machine learning and deep learning. Although, understanding differential privacy requires a mathematical background, this article will cover a very basic overview of the concepts. We will also be coding a sample database and check if it is differentially private or not.

Table of contents

- Table of contents

- Introduction

- Differential Privacy

- Differencing attacks

- Implementing differential privacy

- Types of differential privacy

- Differential privacy in real life

- Conclusion

- Further reading

Introduction

In this digital era, most companies are data-driven. Our data is harvested to help keep growing their economic incentives, in exchange for services provided to us. Research institutions often use and share data containing confidential information about individuals. Improper disclosure of such data can have adverse consequences for a data subject’s private information, or even lead to civil liability or bodily harm related to physical or mental health.

In the late 2000s, Netflix ran a competition for building a recommendation system. To build it, they released a large dataset by anonymizing the data which didn’t leak the movie names or users' ratings. Two professors from the University of Texas de-anonymized them completely by comparing them with IMDB ratings, thus revealing the personal information about the dataset. This a great example of how data privacy has been compromised! Similarly, one such data breach of health records happened in 1997, where medical records of the governor of Massachusetts were identified by matching anonymized medical encounter data with voter registration records.

This sort of data leakage is very alarming to both the users as well as organizations. To resolve this, we can either completely randomize the way the data is sent, using keys that are only decipherable at either end (Differential Learning). An alternative, being federated learning, where we bring the model to the data instead of or vice-versa. For the scope of this article we will mainly focus on the concept of differential privacy to check for leakage in data.

Differential Privacy

Former definition

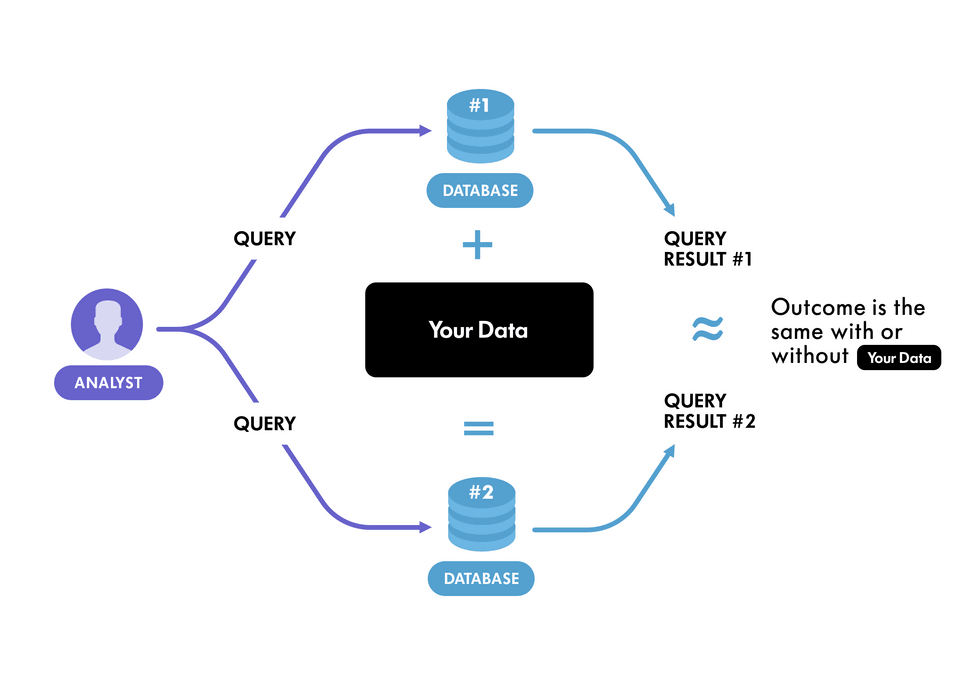

Anything that can be learned from a statistical database can be learned without accessing the database

This definition does not hold. We need to consider two things, first is people and the second is data. We need to protect people's rights while (correctly predicting) using their data. In certain cases, the whole database must be kept private, to protect the information, because there are chances of data leaks.

Modern definition

It’s a promise made by a data holder to a data subject, that “You will not be affected, adversely or otherwise, by allowing your data to be used for study analysis, no matter what other studies, datasets or information is available” - Cynthia Dwork (Godfather of Differential Privacy)

A simpler way to understand this modern definition is to go over an example:

Assume, we have a small database of 5000 entries with 0 or 1 as a value for each row, specifying certain property like 'people with cancer' as 1, and 'people without cancer' as 0. So, here our goal would be "Even if we removed detail of 1 person, the query of the database must not change” then the privacy of the information is protected. We will see its implementation very shortly.

Differencing attacks

To continue with the previous explanation, let's say we want to check if privacy has been preserved for every individual in the database, what we can do is:

- Find the sum of all the values before removal of 1 persons' data (the original values)

- Then find the sum of all values after removing that person (a new database with 1 missing value)

- On finding the difference between both the summations, we get the exact detail of that person thus we will know if he/she has cancer or not. This shows that the privacy of that individual has been leaked. This is one of the simplest differencing attacks, using a summation query.

Similarly, there are other types of differencing attacks using various other functions like mean(), median(), and so on. This measure of how much data is leaked through a query can be measured with sensitivity (evaluation of privacy leakage is measured in terms of sensitivity). In simple terms, it can be said as the largest possible difference for that one row, for any dataset. For our example, the sensitivity is 1, which means that adding or removing a single row from the dataset will change the count by at most 1.

Implementing differential privacy

Now, we are going to implement differential privacy for a simple database query using a summation differencing attack. The database has only 1 column with Boolean types. The Boolean type denotes if a person possesses some private attribute or not (for example, if a person has a particular disease or not). And, we are going to learn, if the database is differentially private or not.

Creating a database

Create a simple database containing 5000 entries consisting of values 0s and 1s. Here, we randomly generate binary values using random.choice()

1import random2

3 # the number of entries in our database4 num_entries = 50005

6 original_database = [] # The original database containing 0s and 1s7

8 for i in range(num_entries):9 original_database.append(random.choice([0,1])) # Generate random number from choice of 0 and 110

11 print(original_database)Output:

1Out[0]: [1, 1, 0, 0, 1, .., 0, 1, 1]To demonstrate differential privacy, we try to manually omit certain values from the database, and check if privacy is still preserved or not. So, we create a method to accept remove_index as an argument and remove it from the database.

1def create_database_with_missing_value(database,remove_index):2 database_before = database[0:remove_index] # List slicing till remove_index (0, remove_index)3 database_after = database[remove_index+1:] # List slicing after remove_index (remove_index + 1, len(original_database))4 return database_before + database_after # Concatenating both the lists5

6 create_database_with_missing_value(original_database, 3) # A sample output on removal of 3rd indexOutput:

1Out[1]: [ 1, 1, 0, 1, 0..., 0, 0, 0]Now, we create a set of such databases (parallel databases), where index i is removed from each of the databases.

1def create_set_of_databases(database):2 databases = list() # Contains lists of list - A set of databases3

4 for i in range(len(database)):5 new_database = create_database_with_missing_value(database,i) # Create a database after removing index i from it6 databases.append(new_database) # Append the new database to the list of databases7

8 return databases9

10 print(create_set_of_databases(original_database))Output:

1Out[2]: [[ 1, 1, 1, 0, 1, 1, 1, 1, 1, ...., 0, 0, 1, 0, 0, 1, 1, 0, 0, 0],2 [ 1, 1, 1, 0, 1, 1, 1, 1, 1, ...., 0, 0, 1, 0, 0, 1, 1, 0, 0, 0],3 [ 1, 1, 1, 0, 1, 1, 1, 1, 1, ...., 0, 0, 1, 0, 0, 1, 1, 0, 0, 0],4 .................5 [ 1, 1, 1, 0, 1, 1, 1, 1, 1, ...., 0, 0, 1, 0, 0, 1, 1, 0, 0, 0]]Next, we create a set of databases based on the users' input. Modularizing the previous snippets as create_database(num_entries)

1def create_databases(num_entries):2 3 original_database = [] # A list containing binary values4 for i in range(num_entries):5 original_database.append(random.choice([0,1])) # Generate random choices and append to the list6

7 databases = get_set_of_databases(original_database) # Create a set of databases, having 1 missing value in each8

9 return original_database, databases10

11 original_database, databases = create_set_of_databases(5000) # Create 5000 different set of databases with each database having 1 missing valueEvaluating differential privacy of a function

Seeing as we have created a sample database for demonstration of differential privacy. We should now be able to query the information and check if the query leaks private information or not.

As we mentioned before, the evaluation of privacy leakage is measured in terms of sensitivity. On iterating through each row of the database, we measure the difference in the output of the query.

Finding the mean and sum of all values in the original database (without removing values)

1# A query to find the mean of values in each of the databases2 def query_mean(db):3 return sum(db)/len(db)4

5 # A query to find the sum of values in each of the databases6 def query_sum(db):7 return sum(db)8

9 full_db_result = query_mean(db) # Store the mean10 print(full_db_result)Output:

1Out[4]: 0.5130Performing the query for all values in new database (each containing 1 missing value)

1def sensitivity(query,num_entries):2 original_db,dbs = create_db_and_parallels(num_entries)3 sensitive = 0 # Assume there is no leakage4

5 full_db_result = query_mean(original_db) # Query each new database6

7 for db in dbs:8 db_distance = abs(query_mean(db) - full_db_result) # Compare the new database with original database9

10 if(db_distance > sensitive):11 sensitive = db_distance # Measure if privacy has been leaked12

13 return sensitiveNow, let us compare how sensitivity varies for different differential attacks query.

1print('Sensitivity using Sum query:', sensitivity(query_sum, 5000))2 print('Sensitivity using Mean query: ', sensitivity(query_mean, 5000))Output:

1>> Sensitivity using Sum query: 12>> Sensitivity using Mean query: 0.00010106021204242532This demonstrates that the new set of databases containing 1 missing value in each of the databases has leaked information about the missing value. Thus, we can conclude that the privacy of that person has been leaked. The lower the value of sensitivity shows less privacy leakage.

Now, let's look at how the leakage can be resolved.

Types of differential privacy

Database administrators have all the rights to query anything from the database, but what if we don't give our valid data in the database. Even if the administrators run a query to find any leakage, they won't be able to find out if the data is valid or not. That leads us to the next idea of adding noise to the inputs.

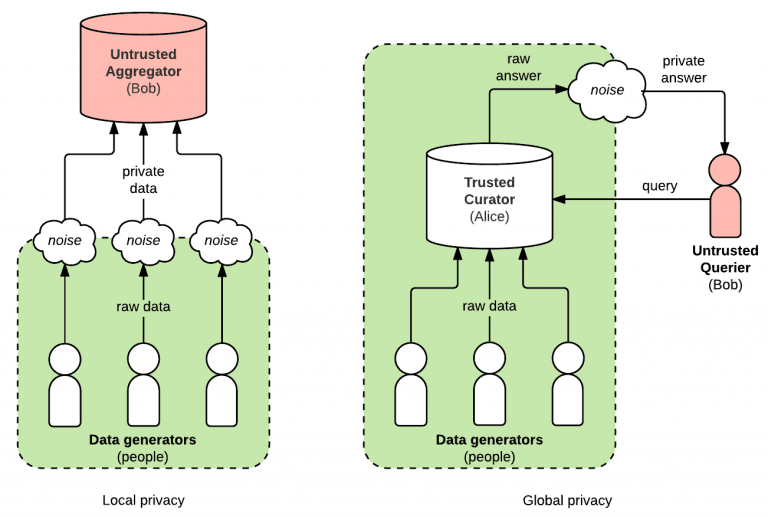

1) Local differential privacy

- With this method, users share their data and add it to the database. It is more secure, but in case the database administrators, not as trustworthy.

- Adding noise before appending to the database.

2) Global differential privacy

With this method, the database administrator adds noise to the information that he/she has in the database. If the database administrator is not trustworthy, there are chances of privacy leakage.

Adding noise before extracting the information from the database

A trusted curator is the owner of the database upon which the global differential privacy is applied. They are trusted to apply differential privacy correctly.

3) Randomized response

This is a technique used in social sciences when trying to learn about the high-level trends for taboo behavior.

Let's understand this by performing a social(thought) experiment.

Imagine, we are conducting research in our neighborhood, to find out how many people have jaywalked before. So, we decide to ask every person in our neighborhood, if they had jaywalked before, and they must reply with a binary answer (yes/no). Since they may not tell us the truth, we can follow the steps below.

- Flip a coin two times

- If the first coin flips heads, we assume they answered honestly to the yes/no question

- If the first coin flips tails, we record the answer according to the second flip

Some people do not wish to disclose their private information to the public, therefore the questions posted by the social scientist must be formatted as such that the people are forced to answer the question honestly.

This is known as plausible deniability, it is a condition in which a subject can safely and believably deny knowledge of any particular truth that may exist because the subject is deliberately made unaware of said truth to benefit or shield the subject from any responsibility associated with the knowledge of such truth.

Below we have a code snippet that shows the implementation of plausible deniability:

1import torch # For installation, refer this https://anaconda.org/pytorch/pytorch2

3# Number of entries in the sample database4num_entries = 50005# Generating random numbers6db = torch.rand(num_entries) > 0.57

8def query(db):9 true_result = torch.mean(db.float())10 # Mean on the original database11 first_flip_coin = (torch.rand(0,len(db)) > 0.5).float()12 # Finding the first coin flip13 second_flip_coin = (torch.rand(0,len(db)) > 0.5).float()14 # Finding the second coin flip15 augmented_database = db.float() * first_flip_coin + (1 - first_flip_coin) * second_flip_coin16 # Removing the skew in the second coin flip17 db_result = torch.mean(augmented_database.float()) * 2 -0.518 return db_result,true_result19

20db,_ = create_databases(5000)21db = torch.Tensor(db)22private_result,true_result = query(db)23print("With Noise: ",private_result)24print("Without Noise: ",true_result)Output:

1With Noise: tensor(0.4880)2Without Noise: tensor(0.4892)The code above shows a demonstration on how randomized response noise can be added to perform plausible deniability. On adding noise, we can reduce the sensitivity of the query (reducing the data leakage)

Differential privacy always requires some noise added to the queries to protect from differential attacks

Differential privacy in real life

1) Google and Apple

Tech giants like Google and Apple use it in every one of their services. For example, they collect your web searches or purchase history to improve your recommendations.

2) Hospitals

Hospitals collect personal data from several patients. Now, let's say we decide to predict if a person has diabetes based on symptoms that other patients had. We must ensure that we must never leak information about any patient while working on our prediction models.

There are several such use-cases, where differential privacy is used. In the near future, we will find this everywhere.

Conclusion

We have had an overview of what differential privacy is, and its importance in helping us preserve privacy. This blog serves only as an introduction to differential privacy, more about it can be found in the referenced articles below. To best understand the concepts, we must try implementing them, this link would be a good starting point.

To summarize:

- We learned the importance of privacy.

- We learned what differential privacy is.

- We implemented privacy leakage in databases, to prove the need for differential privacy.

- We studied various methods to resolve data leakages.

- We went over a few use-cases of differential privacy.

Further reading

- https://arxiv.org/abs/1910.02578

- https://arxiv.org/abs/1911.00222

- https://medium.com/sap-machine-learning-research/client-sided-differential-privacy-preserving-federated-learning-1fab5242d31b

- https://www.comparitech.com/blog/information-security/federated-learning/

- https://towardsdatascience.com/ai-differential-privacy-and-federated-learning-523146d46b85

- https://blog.cryptographyengineering.com/2016/06/15/what-is-differential-privacy/

Peer Review Contributions by Saiharsha Balasubramaniam