An Introduction to KNN Algorithm

— Technical, Machine Learning — 4 min read

In this article, we will learn about a supervised machine learning algorithm called K-Nearest Neighbors (KNN). This algorithm can be used for tasks like classification and prediction.

By the end of this article, you will get an overview of various supervised machine learning algorithms, what the KNN algorithm is, how it works, and also learn to build the algorithm from scratch. As a prerequisite, a little understanding of machine learning and Python would help beginners.

Table of contents

Supervised learning

According to Wikipedia, Supervised learning is the machine learning task of learning a function that maps an input to an output based on example input-output pairs. It infers a function from labeled training data consisting of a set of training examples.

In simpler words, it can be said that we are teaching the machine how to predict or classify by showing some samples (training and testing) of the possible predictions or classifications.

We use training data with features and labels, to enable the machine to learn first. Later, it is being validated or tested using the test data. Thereby, this method of learning is called supervised learning.

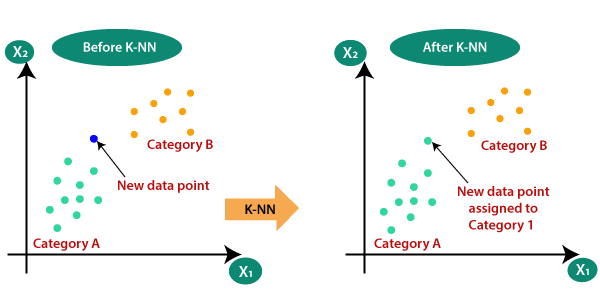

K-Nearest Neighbors algorithm

K-Nearest Neighbors (KNN) algorithm is one such supervised learning method that can be used for classification and regression.

Classification refers to a predictive modeling problem where a class label is predicted for a given example of input data. For example, classification of an animals as cat or dog, emails as spam or not.

In classfication, the prediction values are discrete values like 0 or 1, which relates to true or false. There can be multi-variate (more than one label) classifications as well.

Whereas, regression is another type of problem, that requires prediction of continuous values. For example, if we want to predict the approximate value of a share in the stock market, we will have to use regression.

Steps followed in KNN algorithm:

- Load the training and testing datasets.

- Specify or choose the value of

K. - For each point on the test data perform the following:

- Calculate the distance between the point and each point of the training dataset. We can use Euclidean distance or Manhattan distance.

- Sort the values in ascending order based on distances.

- Find the top K values from the sorted list.

- Find the frequency (Mode) of the labels of the top K values.

- Assign the mode to the test data point.

- The assigned value is the classified or predicted value for the particular test data point.

To understand the algorithm mathematically, you could refer to this article.

Image source: KNN algorithm shown visually

Now, we will learn how to build the above steps from scratch.

Step by step guide

Let's learn to build the K-Nearest Neighbors algorithm from scratch. Though, we use certain libraries like sklearn containing pre-defined methods to classify or predict using the KNN algorithm; learning to build it from scratch would help grasp us the concepts better and more effectively.

Objective

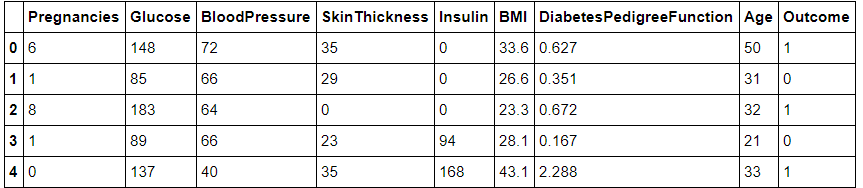

As a demonstration, we will be making use of a Diabetes dataset, which contains various parameters to classify if a person has diabetes or not.

You can download the dataset here.

A sample screenshot of the dataset is shown below:

Screenshot of diabetes dataset

Installation

For building the KNN algorithm from scratch, you can install any version of Python above 3.0.

To download Python, please use this link.

On installing Python, you have to install the following libraries:

You can install the above libraries using the following commands:

- Numpy using

pip install numpy. - Pandas using

pip install pandas. - Operators using

pip install pyoperators. - Statistics using

pip install statistics.

Import libraries

On setting up the environment, let's start coding!

First, you'll have to import those necessary libraries.

1import pandas as pd2import numpy as np3import operator4from statistics import modeRead the dataset

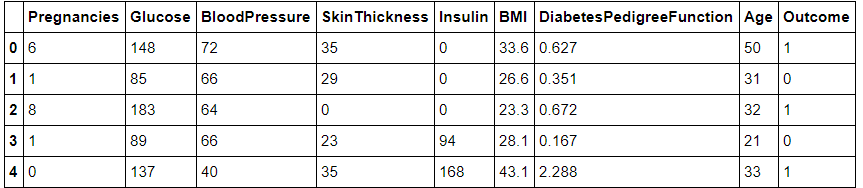

Now, you have to import the dataset and read it by using a function called read_csv(). This function takes the CSV file as an argument and returns a data frame.

1#col_names = ['pregnant', 'glucose', 'bp', 'skin', 'insulin', 'bmi', 'pedigree', 'age', 'label']2data = pd.read_csv("diabetes.csv")

First 5 rows of the dataset

Build utility functions

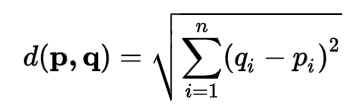

Find Euclidean Distance

In the KNN algorithm, we use Euclidean distance to find the distance between any two points. Euclidean distance is the squares of differences between any two points.

The formula for Euclidean distance is:

The formula for Euclidean distance

Alternatively, we can use other distance measures like Manhattan distance or Absolute distance.

1def euclidean_distance(x, y, length):2 # Dictionary to keep track of coordinate as key, with distance as the value3 sum_squared_distance = {}4 for i in range(len(x)):5 sum = 06 sum += np.square(x[i][0] - y[0]) # Square of differnce in x-axis7 sum += np.square(x[i][1] - y[1]) # Square of differnce in y-axis8 sum_squared_distance[i] = np.sqrt(sum)9 return sum_squared_distanceSorting dictionary based on the value

A function to accept a dictionary as an input argument and return a sorted dictionary based on values.

A Python dictionary contains a "key-value" pair. When we use sort() for a dictionary, by default it gets sorted based on the "key".

Since we want sorting based on "value", we will have to use a custom sorting method, as shown below:

1# Sort the items in the dictionary of the calculated distances 2def sort_distance(distance): 3 sorted_d = sorted(distance.items(), key = operator.itemgetter(1))4 return sorted_dHere, the items() function returns a list of tuples containing "key-value" pairs. And, here we use the key for sorting, as the "Key".

Find top K values

In KNN, the K value specifies the number of maximum nearest neighbors used for classifying or predicting.

Here, we have to find the top K values.

To do so, we make use of the following function:

1# Find the Top K classes from the sorted items2def top_k_dictionary(sorted_d):3 top_k = {}4 for i in range(k):5 top_k.update(sorted_d[:k])6 return top_kHere, [:k] is a slicing function used in Python, that helps us to fetch the first k values starting from 0. This is used as a shorthand notation for [0:k].

Classification using mode

On finding the top K elements, we use mode to find the possible classification outcome. Mode is a statistical measure, that tells us the maximum occurrence of a particular value.

1# Find the mode of the Top K class and it is the prediction2def top_k_class(query, top_k, data_dict_train, data_dict_test): 3 final_top_k = []4 for i in top_k:5 final_top_k.append(data_dict_train[i][0])6 print(query," Prediction is ",data_dict_test[mode(final_top_k)])The code snippet above prints the possible predictions for the query.

Build the KNN function

Having built the utility functions, now we'll have to make use of them to build our actual function for finding the K-Nearest Neighbors.

1# Find the prediction of the query2def knn(data,query,k):3 distances = {}4 sort = {}5 length = 26 data_dict_train = {} # Dictionary to hold train data7 data_dict_test = {} # Dictionary to hold test data8 for i in range(len(data)):9 data_dict_train[i] = data[i]10 data_dict_test[i] = y[i]11 12 distance = euclidean_distance(data, query, length) # returns a dictionary of euclidean distances13 sorted_d = sort_distance(distance) # returns a sorted dictionary based on value14 top_k = top_k_dictionary(sorted_d) # finds the top K elements15 top_k_class(query, top_k, data_dict_train, data_dict_test) # prediction functionBuild the main function

Now, let's build a main() function which calls the knn() function by passing the required features and labels, along with some sample queries to demonstrate classification.

1# Pass the input and output lists 2def main():3 x = data[['Pregnancies','Glucose','BloodPressure','SkinThickness','Insulin','BMI','DiabetesPedigreeFunction','Age']].values # Features4 y = data.iloc[:,-1] # Labels5 k = 3 # Specifying the value of K6

7 query = [[0,100],[5,100]] # 2 possible test data query8 for i in query:9 knn(x, i, k)10

11if __name__=='__main__':12 main()Output:

1[0, 100] Prediction is 12[5, 100] Prediction is 0Conclusion

We learned about a supervised learning algorithm called the K-Nearest Neighbors. We also built the KNN algorithm from scratch.

Full code for the KNN algorithm from scratch can be found here.

To learn more about the pre-built KNN algorithm, refer sklearn documentation page.

To summarize:

We learned about supervised learning.

We understood the core concept of K-Nearest Neighbors and its applications.

We learned how to work build a KNN algorithm from scratch.

Happy coding!

Further reading

Peer Review Contributions by: Saiharsha Balasubramaniam